Large Language Models (LLMs) are a class of machine learning models designed to understand, generate, and manipulate natural language. These models are trained on vast amounts of text data and use complex architectures like Transformers to perform various tasks such as translation, summarization, and answering questions.

- They provide a way to scale language-based solutions across multiple industries.

- LLMs power many of today’s intelligent systems such as chatbots, language translation tools, and content generation platforms.

- Fine-tuning LLMs allows for domain-specific applications like legal document analysis, healthcare diagnostics, etc.

- Large volumes of data (terabytes or petabytes).

- Requires high computational power for processing.

- High variability in the data (text, image, audio).

- Complex relationships within data.

- Limited data availability (megabytes or gigabytes).

- Difficult to train sophisticated models due to lack of diverse information.

- Requires transfer learning or data augmentation techniques to improve accuracy.

- 2001: Learning probabilities over a sequence of words (e.g., n-grams).

- 2013: Grouping words with similar meanings based on the context (e.g., Word2Vec).

- 2015: Preserving word context by assigning weights relative to other words (e.g., Attention mechanisms).

- 2018: Learning language through exposure to large-scale corpora (e.g., the internet) to develop highly accurate LLMs (e.g., BERT, GPT).

The key idea is to create a baseline model from where specific use cases can be developed by fine-tuning or modifying existing architectures.

RNNs were developed to solve the problem of input memorization in traditional Neural Networks by using loops to remember sequences over time.

- One-to-One: Single input, single output (e.g., Image Classification).

- One-to-Many: Single input, sequence output (e.g., Image Captioning).

- Many-to-One: Sequence input, single output (e.g., Sentiment Analysis).

- Many-to-Many: Sequence input, sequence output (e.g., Machine Translation, Summarization).

- Many-to-One (One Character at a Time): Used for Sentiment Analysis, where a sequence of words is reduced to a sentiment label.

- Many-to-Many (Full Sentences): Used for tasks like Machine Translation, where full sentences are input and output in different languages.

- Many-to-Many (Full Sentences): Used for Text Summarization, where long input text is reduced to a summary.

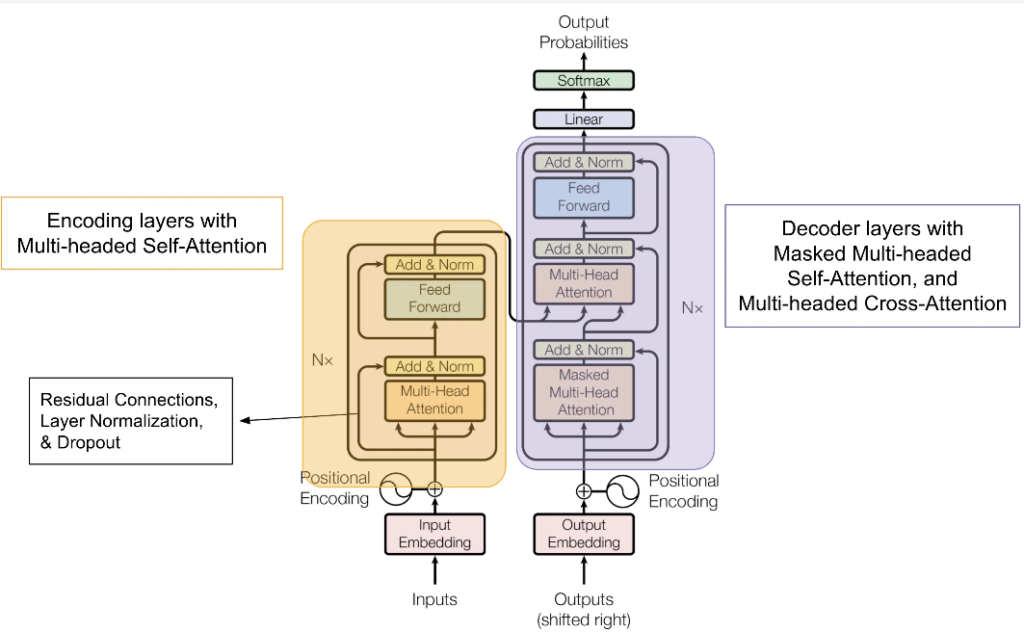

This architecture sets the standard for modern LLMs by using two networks:

- Encoder: Processes the input sequence and compresses it into a context vector.

- Decoder: Generates the output sequence from the context vector.

Diagram (Textual Representation):

This architecture is used in various applications like machine translation and summarization.

Machine Translation with RNNs: The use of RNN-based architectures in translation tasks helped model long sequences of words in different languages.

Problems with RNNs:

- Cannot be trained in parallel: Due to sequential nature, RNNs must be trained one step at a time.

- Long Range Dependency: RNNs struggle with long sequences as they tend to forget information over time.

- Sequential Processing: RNNs process sequences one word at a time, making them slower than modern architectures.

- Language Complexity: Words can have multiple meanings depending on context.

- Beyond Word Sequences: Merely memorizing word sequences isn’t enough; a model must understand the broader context in which words are used.

The Transformer architecture revolutionized NLP with its attention mechanism, allowing models to understand the entire context of a sentence by focusing on relevant parts of the input sequence. This led to faster, more accurate LLMs that can be trained in parallel and handle long-range dependencies efficiently.

Attention is All You Need

Transformers to the Rescue!

Transformers are a breakthrough architecture in NLP, designed to solve the shortcomings of RNNs and improve scalability, parallelization, and contextual understanding.

Key Advantages of Transformers:

- Scale Efficiently: They handle large datasets without the bottlenecks of RNNs or LSTMs.

- Parallelization: Unlike RNNs, transformers process words simultaneously, allowing for faster training.

- Better Contextual Learning: The self-attention mechanism allows transformers to understand the context of each word relative to the entire sentence.

Self-Attention Mechanism:

Self-attention enables the model to focus on different parts of the input sequence when encoding a specific word.

Example Sentence:

The teacher taught the student with the book.

In the self-attention mechanism, each word can “attend” to other words in the sentence. Here’s how each word might connect in the self-attention process:

- The → [teacher, taught, student, with, book]

- Teacher → [the, taught, student, with, book]

- Taught → [the, teacher, student, with, book]

- Student → [the, teacher, taught, with, book]

- With → [the, teacher, taught, student, book]

- Book → [the, teacher, taught, student, with]

The self-attention mechanism calculates the relationship between each word and all other words in the sentence to understand the full context.

Transformer Architecture

Diagram:

- Encoder: Processes the entire input sequence and generates a contextual representation for each token.

- Decoder: Uses the encoder’s output and generates the final sequence step by step, attending to both previous outputs and encoder’s representation.

Tokenization in Transformers

Transformers only work with numerical input, which means text has to be converted into numbers using a tokenizer.

Transforming Text Inputs:

For example, the sentence “The teacher taught the student” is tokenized into numerical representations:

| Token ID | Word |

|---|---|

| 220 | The |

| 432 | Teacher |

| 857 | Taught |

| 220 | the |

Each word is mapped to a specific token ID.

Types of Tokenizers

1. Word-Based Tokenization

This approach splits the sentence by words and assigns a unique token to each word.

| Token ID | Word |

|---|---|

| 220 | The |

| 432 | Teacher |

| 857 | Taught |

| 122 | the |

Example:

“Let’s Create Tokens now” would be tokenized as:

Token ID Word 120 Let’s 302 Create 417 Tokens 189 now

Limitations:

- Vocabulary Size: Large models require thousands of unique tokens.

- Unknown Words (OOV): Any word not in the vocabulary becomes a problem and is treated as “unknown.”

2. Subword-Based Tokenization

Instead of splitting words fully, it breaks words into subword units. This addresses the out-of-vocabulary (OOV) issue by breaking down unfamiliar words into known subword pieces.

For example:

- UncommonWord might be split as:

Uncommon→Un,common,Word

3. Character-Based Tokenization

This approach treats each character as a token. It can handle any input but results in longer sequences.

For example:

- “Taught” would be tokenized as:

T,a,u,g,h,t

Tokenization Example and Comparison:

Word-Based:

| Token ID | Word |

|---|---|

| 220 | The |

| 432 | Teacher |

| 857 | Taught |

Character-Based:

| Token ID | Character |

|---|---|

| 12 | T |

| 45 | h |

| 17 | e |

Subword-Based:

| Token ID | Subword |

|---|---|

| 220 | Teach |

| 122 | -er |

Conclusion

Transformers revolutionized NLP by introducing attention mechanisms, allowing models to focus on relevant parts of the input. The tokenization techniques play a crucial role in enabling these models to handle diverse language inputs efficiently. By converting words into numerical representations and applying self-attention, Transformers achieve unparalleled performance in tasks like translation, summarization, and more.

Byte-Pair Encoding (BPE)

Byte-Pair Encoding (BPE) is an iterative approach that starts from pre-tokenization and continues until an optimal vocabulary is created based on the frequency of token pairs. BPE is particularly useful for compressing the vocabulary size while still capturing frequent word patterns.

How BPE Works:

- Pre-tokenization: The sentence is first split into characters or subwords.

- Pairing based on frequency: The algorithm finds the most frequent pair of characters or subwords in the corpus and merges them into a single unit.

- Repeat: The process repeats until a predefined vocabulary size is reached.

Example:

- “The teacher taught the student” might start as:

Theteacher- BPE merges “th” to form “th” and repeats based on frequency.

SentencePiece

SentencePiece treats the input as a continuous raw data stream without assuming spaces as word separators. It applies techniques such as BPE or Unigram to segment the text.

Advantages of SentencePiece:

- Space-Free Input: It can handle languages like Japanese, Chinese, or texts with no explicit spaces.

- Versatility: It can be combined with different tokenization techniques like BPE and unigram models.

Embeddings

Embeddings transform tokens into trainable vectors in a higher-dimensional space. These vectors capture semantic meanings and relationships between words in the input.

Intuition:

- Embeddings encode the context of a token within a sentence.

- Each token is represented in a unique space, and similar words will have closer distances.

Example of Embeddings:

| Token ID | Word | Embedding Vector (Illustrative) |

|---|---|---|

| 220 | The | [0.2, 0.1, 0.4, 0.7] |

| 432 | Teacher | [0.5, 0.8, 0.2, 0.9] |

| 857 | Taught | [0.6, 0.3, 0.4, 0.8] |

| 122 | the | [0.1, 0.2, 0.5, 0.6] |

Diagram: Tokenization to Embedding and Decoder

Word Embedding Distance Diagram

Visualizing the distance between word embeddings (smaller distance indicates similar context):

— [Teacher]——[Taught]——[Student] \ / \ / [The]—————–[Book]

In the embedding space, Teacher, Taught, and Student are closer to each other, indicating they are contextually related.

Transformer Architecture

Transformers consist of two main components:

- Encoder

- Decoder

Transformers can handle complex dependencies through self-attention mechanisms, allowing the model to consider the entire sequence at once.

Encoder Internals

The encoder’s inputs first flow through a self-attention layer, allowing it to look at other words in the sentence while encoding each specific word.

Encoder Diagram:

Decoder Internals

The decoder uses both self-attention and an additional attention layer to focus on the encoder’s output, helping it generate relevant outputs.

Decoder Diagram:

Summary of Encoder and Decoder

- Encoder:

- Encodes input sequences and produces a vector per token with contextual understanding.

- Decoder:

- Accepts inputs and generates new tokens step by step, focusing on relevant input parts.

Complete Transformer Model:

Model Types

1. Encoder-Only Models

- Task: These models are used for tasks like sentiment analysis.

- Characteristic: Input and output lengths are the same.

- Example: BERT

- Use Case: Sentiment analysis, classification tasks.

2. Encoder-Decoder Models

- Task: Used for tasks like machine translation where input and output lengths differ.

- Characteristic: Input length ≠ Output length.

- Example: BERT, T5

- Use Case: Machine translation, summarization.

3. Decoder-Only Models

- Task: These models are commonly used for text generation tasks.

- Characteristic: Popular in generative models.

- Example: GPT, LLaMA

- Use Case: Text generation, chatbots, creative writing.

Generative Pretrained Transformer (GPT)

GPT models are decoder-only models consisting of stacks of decoder blocks. They use self-attention mechanisms and feed-forward networks to generate text outputs.

Key Characteristics of GPT:

- Self-attention: Helps maintain relationships between tokens and context.

- Feed-forward networks: Process the transformed input at each stage.

- Generative model: Most popular for tasks like text generation.

Diagram for GPT Model:

Conclusion

In this section, we covered:

- Byte-Pair Encoding (BPE): Tokenization strategy based on merging frequent pairs.

- Embeddings: Vector representations of tokens in high-dimensional space.

- Transformer Architecture: Comprising encoders and decoders to handle complex NLP tasks.

- Model Types: The different architectures (Encoder-only, Encoder-Decoder, Decoder-only) used for tasks like sentiment analysis, translation, and text generation.

- GPT: A generative model using decoder-only blocks for text generation.

Text Generation

In recent years, open-ended language generation has gained significant attention, largely due to the rise of large transformer-based language models like OpenAI’s ChatGPT and Meta’s LLaMA. These models are trained on massive corpora, including millions of webpages, and are capable of handling a variety of tasks. They can generalize to unseen tasks, process code, and even take non-textual data as input.

Alongside improvements in transformer architecture and the use of massive unsupervised training data, better decoding methods have also played a crucial role in generating coherent and contextually appropriate text.

Decoding Methods

1. Greedy Search

Greedy Search is the simplest decoding method for text generation. In this method, the model selects the word with the highest probability at each step to generate the next word.

- Pros: Simple and fast.

- Cons: Can result in suboptimal text as it does not account for the global context of the entire sentence. The model might miss better sequences with slightly lower immediate probabilities.

Example:

Suppose we are generating a sentence starting with: “The cat is”

- Greedy Search might choose: “The cat is sleeping” (because “sleeping” had the highest probability after “is”).

2. Beam Search

Beam Search improves on Greedy Search by considering multiple hypotheses at each step. It keeps track of the num_beams most likely sequences and eventually selects the one with the highest overall probability.

- num_beams: This determines how many different hypotheses are kept at each time step.

- Pros: Reduces the risk of missing high-probability word sequences.

- Cons: Computationally expensive and can still generate repetitive or dull text.

Illustration: Beam Search with num_beams = 2

Let’s assume we’re generating text starting with: “The cat is”

| Time Step 1 | Time Step 2 | Hypotheses |

|---|---|---|

| The | The cat is sleeping | Hypothesis 1 (chosen) |

| The | The cat is purring | Hypothesis 2 (next best) |

Beam Search keeps two possibilities (“sleeping” and “purring”), and based on further probabilities, it may choose the best one in subsequent steps.

3. Sampling

Sampling refers to randomly selecting the next word from the probability distribution predicted by the model.

- Pros: Adds variability and diversity to text generation.

- Cons: The generated text can be incoherent or irrelevant, especially with highly uncertain models.

Example:

If the model provides probabilities like:

- “The cat is” ->

0.4: sleeping,0.3: purring,0.2: running,0.1: barking

Sampling might randomly select “purring” or “running,” adding diversity compared to deterministic methods like Greedy Search.

4. Top-K Sampling

In Top-K Sampling, instead of considering all possible words in the vocabulary, the top K most likely words are chosen, and the probability mass is redistributed among them.

- Pros: Filters out unlikely candidates, making the generation more controlled.

- Cons: If K is too small, it might lead to repetitive or overly constrained text.

Example:

- K = 3, Top 3 words:

"sleeping","purring","running" - The model will then randomly sample from these three words.

GPT-2 uses Top-K Sampling, which helped improve its performance in story generation and other creative tasks.

5. Top-P (Nucleus) Sampling

Top-P Sampling (also known as Nucleus Sampling) dynamically adjusts the number of words to sample from. Instead of choosing a fixed K, it selects the smallest set of words whose cumulative probability exceeds P (e.g., 90%). The probability mass is then redistributed among this set of words.

- Pros: Balances the strengths of both greedy and sampling methods. It can handle highly predictable and highly uncertain distributions more flexibly.

- Cons: Slightly more complex to implement but often provides better results in open-ended tasks.

Example:

- P = 0.9: This means that the model will sample from the smallest set of words whose total probability is greater than 90%.

- If “sleeping” = 0.4, “purring” = 0.3, “running” = 0.2, then these three words will be selected, and one will be sampled based on their probabilities.

Which Decoding Method is Best?

The choice of decoding method depends on the specific task and desired outcome:

- Greedy Search: Fast and simple, but often leads to repetitive or low-quality output. It’s best for constrained tasks where precision matters over creativity.

- Beam Search: Widely used for translation tasks or tasks where coherence is critical, but it can produce boring or repetitive text.

- Sampling: Adds randomness and creativity but can lead to incoherent results.

- Top-K Sampling: A more controlled version of sampling, useful for creative applications like story generation.

- Top-P Sampling: Often seen as the best compromise. It dynamically adjusts the set of candidate words, allowing for both control and diversity in text generation. It is highly favored for open-ended generation tasks like storytelling or dialogue systems.

Recommendation:

- Top-P Sampling is generally preferred for open-ended text generation (e.g., stories, dialogue) due to its dynamic selection of tokens and flexibility.

- Top-K Sampling is also a popular choice for creative tasks, such as GPT-2’s story generation.

Example: Text Generation Using Different Decoding Strategies

Refer to the Python notebook text_generation.ipynb in this repository to see how different decoding strategies are applied for generating text using transformer models.

In the notebook, you will find:

- Implementation of Greedy Search, Beam Search, Top-K Sampling, and Top-P Sampling.

- A detailed comparison of the generated text using each method.

- Guidelines on how to tweak the parameters (like

num_beams,K, orP) for your own tasks.

Conclusion

Understanding how different decoding methods work is critical when fine-tuning large language models for text generation tasks. Each method has its pros and cons, and the choice of method will depend on the balance between coherence, diversity, and computational resources.

For more hands-on examples and code implementations, please check the notebook.

Transformers revolutionized NLP by providing efficient, parallelizable architectures capable of handling long-range dependencies in language. GPT models, in particular, have emerged as the leading choice for text generation tasks.

+--------------------+

| Define Use Case |

+--------------------+

|

v

+-----------------------+

| Select Existing or |

| Pre-train Model |

+-----------------------+

|

v

+------------------------+

| Adapt and Align |

| Model |

+------------------------+

|

v

+-----------------------+

| Evaluate |

+-----------------------+

|

v

+-----------------------+

| Application Integration|

+-----------------------+

|

v

+-----------------------+

| Optimize and Deploy |

| for Inference |

+-----------------------+

|

v

+-----------------------+

| Augment Model & Build |

| LLM-powered Apps |

+-----------------------+

Detailed Explanation of Each Step:

The first step in the LLM lifecycle is to clearly define the use case for which the model will be used. This could be anything from text generation, sentiment analysis, code generation, machine translation, etc.

- Example: “Developing an AI-powered chatbot for customer support.”

Key Questions:

- What specific task or problem are we solving?

- Is there a need for creativity, accuracy, or domain-specific knowledge?

At this stage, you decide whether to:

- Use a Pre-trained Model: Choose from existing models such as GPT, BERT, or LLaMA, which are already trained on vast datasets.

- Pre-train from Scratch: In cases where domain-specific knowledge is critical, you might consider training your own model from the ground up using your own datasets.

Factors to Consider:

- Availability of existing models that match your needs.

- Computational resources and dataset availability for pre-training.

This is where the model is refined to better suit the specific use case:

Prompt Engineering: Crafting the right prompts that help the model perform optimally on the intended tasks.

- Example: For a translation task, you might use prompts like, “Translate this sentence from English to Spanish.”

Fine-Tuning: Fine-tune the pre-trained model on a specific dataset to improve performance on the task at hand.

- Example: Fine-tuning GPT-3 on customer service dialogues to create a more reliable chatbot.

Human Feedback in the Loop: Incorporating human feedback to continuously improve the model’s performance.

- Example: Using reinforcement learning from human feedback (RLHF) to adjust outputs based on human preferences.

This stage involves testing the model on various metrics to ensure it is performing as expected. Evaluation could include:

- Accuracy: How correct are the model’s predictions?

- Relevance: How relevant are the generated outputs to the given prompt or query?

- Bias and Fairness: Is the model producing any biased or unfair outcomes?

Metrics for Evaluation:

- Precision/Recall for classification tasks.

- BLEU score for translation tasks.

- Human evaluation for creative tasks like text generation.

Once the model is fine-tuned and evaluated, it is ready for integration into real-world applications. This involves:

Optimize and Deploy for Inference: Ensuring the model is optimized for quick and efficient inference (i.e., making predictions or generating text).

- Example: Optimizing GPT-based chatbots for real-time customer queries.

Augment Model and Build LLM-powered Applications: Building applications that leverage the power of LLMs. These could be applications like:

- AI-powered writing assistants (e.g., Grammarly).

- Automated coding tools (e.g., GitHub Copilot).

- Conversational AI systems (e.g., ChatGPT, Alexa).

- Define the Use Case: Understand the problem to solve or task to perform.

- Select: Choose an existing model or decide to pre-train a new one.

- Adapt and Align the Model: Use techniques like prompt engineering, fine-tuning, and human feedback to refine the model.

- Evaluate: Test the model for accuracy, relevance, and fairness.

- Application Integration: Optimize and deploy the model, then use it to power real-world applications.

By following this process, you can effectively leverage LLMs for a wide range of use cases, from simple text generation tasks to complex, domain-specific applications. For more hands-on examples and code, refer to the accompanying Python notebook in this repository.

Understanding the various types of pre-training approaches for Large Language Models (LLMs) is key to choosing the right model architecture for your use case. Each approach serves specific tasks and operates on different objectives depending on whether the model is encoder-based, decoder-based, or both.

Example:

Original Text: “The teacher teaches the student.”

Span Corruption:

During pre-training, part of the input sequence is masked or corrupted, and the model is trained to reconstruct the missing spans.

Masked Input:[The teacher <MASK> <MASK> student]

The Encoder-Decoder LLM tries to predict the missing tokens.

Prediction:[The teacher <X> student]

Where <X> represents the corrupted token (e.g., a sentiment or missing word).

- Translation

- Text Summarization

- Question Answering

- T5

- BART

Original Text: “The teacher teaches the student.”

Masked Language Modeling (MLM):

Here, specific words are masked, and the model is trained to reconstruct the entire sequence by predicting the masked word(s).

Masked Input:[The teacher <MASK> the student]

Objective:

Reconstruct the original text by filling in the masked words.

Bidirectional Context:

Autoencoding models use bidirectional context to understand both left and right contexts of the token being predicted.

The | teacher | teaches | the | student

-> -> <- <-

- BERT

- RoBERTa

- Sentiment Analysis

- Named Entity Recognition (NER)

- Word Classification

Original Text: “The teacher teaches the student.”

Causal Language Modeling (CLM):

Autoregressive models predict the next word in a sequence based on the previous words.

Input:[The | Teacher | ? ]

The model’s goal is to predict the next token.

Objective:

Predict the next word in the sequence (e.g., “teaches”).

Unidirectional Context:

These models use only the left context to predict the next token.

The | teacher | teaches

-> -> ->

- GPT

- BLOOM

- Text Generation

- Zero-shot Inference

Given an original text: “The teacher teaches the student.”

- Autoencoding Models (MLM): Masked Language Modeling for reconstructing the original text.

- Autoregressive Models (CLM): Causal Language Modeling for predicting the next token.

- Sequence-to-Sequence Models: Use span corruption to reconstruct masked parts of a sequence.

Pre-training models for domain-specific language, such as legal or medical language, ensures that the model understands the jargon and specific nuances of each field.

- Legal Language Example:

- “The prosecutor had difficulty proving mens rea, as the defendant seemed unaware that his actions were illegal.”

- Medical Language Example:

- “After the biopsy, the doctor confirmed that the tumor was malignant and recommended immediate treatment.”

Training models on specific domain data enhances their accuracy and relevance in those fields, allowing them to perform better on tasks such as legal document analysis or medical diagnosis recommendations.

In this phase, we adapt and customize a pre-trained foundational model to meet specific business or task-related objectives. This involves several techniques, including prompt engineering, fine-tuning, and incorporating human feedback to improve the model’s accuracy and relevance.

Prompt Engineering is the practice of crafting precise and targeted input prompts that help the model understand what is expected from it. Since foundational models like GPT or BERT have been trained on a wide variety of data, they can perform many tasks if prompted correctly.

Goal: Make the model perform specific tasks without changing its core architecture.

Approach: Instead of retraining the model, you experiment with different ways to phrase your inputs.

Example:

- Task: Translate English to Spanish

- Prompt: “Translate the following sentence from English to Spanish: ‘The teacher teaches the student.'”

By carefully designing the prompt, you can guide the model to produce the desired output.

Fine-tuning involves training a pre-trained model on a specific, smaller dataset to adapt it for a particular task. It allows the model to specialize while retaining its general capabilities.

Goal: Improve the model’s performance on domain-specific tasks by adjusting the weights learned during pre-training.

Approach: The model is exposed to examples from the target domain (e.g., legal documents, medical records) and adjusts its internal parameters to optimize performance for that task.

Example:

- A foundational model (e.g., BERT) is fine-tuned on a dataset of legal texts to improve its ability to interpret and process legal language.

Human feedback is essential to ensuring that the model outputs are aligned with human expectations, especially for tasks like creative text generation, dialogue systems, or decision-making.

Goal: Continuously improve the model’s behavior based on human judgment.

Approach: Use techniques like Reinforcement Learning with Human Feedback (RLHF) to incorporate human evaluations into the training loop.

Example:

- A chatbot is designed to answer customer queries. After each response, humans rate the quality of the answers, and the model updates its parameters based on this feedback, improving future responses.

Evaluation is the process of testing the model’s effectiveness after adaptation. It involves measuring the model’s performance against relevant metrics such as accuracy, relevance, fairness, and bias.

Goal: Ensure the model meets the necessary requirements for the given task.

Metrics: Accuracy, Precision, Recall, BLEU score (for translation), or human evaluations for creative tasks.

Example:

- After fine-tuning a sentiment analysis model, evaluate it on a dataset of customer reviews to check its accuracy and consistency in classifying sentiments (positive, negative, or neutral).

When adapting models, two primary techniques are employed:

- Fast, requires no retraining of the model.

- Allows you to experiment with different formulations of input to achieve the desired output.

- Requires retraining on a specific dataset but allows for greater customization.

- Fine-tunes the model’s parameters to enhance task-specific performance.

The process of adapting a foundational model can be visualized as a layered cake where each layer represents a different level of customization and business value. Moving between layers involves trade-offs in terms of data quality and data cost.

Preference Layer

- Goal: Achieve fine-grained behavior based on human feedback (e.g., chatbot conversations, creative writing tasks).

- Business Value: High

- Effort: Low data requirement, but high dependency on human feedback.

Example: A conversational agent is continuously trained based on user feedback to produce more relevant and satisfying answers.

Task Layer

- Goal: Perform specific tasks such as Sentiment Analysis, Question Answering, Text Summarization, etc.

- Business Value: Medium

- Effort: Requires some domain-specific data to train the model.

Example: A sentiment analysis model is trained to classify customer feedback into positive, neutral, and negative categories.

Foundational Layer

- Goal: Learn patterns and relationships in large amounts of data.

- Business Value: Low (for specific tasks), High (as a general foundation).

- Effort: Trained on large, generic datasets like Wikipedia, books, and web pages.

Example: GPT, BERT are foundational models that learn general language representations.

Foundational Layer

- Goal: Learn general patterns in a wide variety of datasets.

- Data Cost: High due to the large dataset and computational resources required for training.

- Business Value: Low for specific applications but critical for model generalization.

Task Layer

- Goal: Perform specific tasks (e.g., Text Summarization, Q&A, etc.).

- Data Cost: Medium. Fine-tuning on a smaller, domain-specific dataset.

- Business Value: Medium.

Preference Layer

- Goal: Fine-tuned behavior based on human preferences and feedback.

- Data Cost: Low, but human feedback loops are critical for fine-grained control.

- Business Value: High.

When customizing models, it’s essential to balance data quality and cost. The Top-Down Approach requires less data but may suffer from a reduction in general data quality. Conversely, the Bottom-Up Approach requires more data and computational resources but results in more robust and fine-tuned models for specific tasks.

- Prompt Engineering: Fast and requires minimal effort, but limited customization.

- Fine-Tuning: More effort and data-intensive but allows greater specialization for the target task.

- Human Feedback: Continuous refinement of the model’s output to match human expectations.

- Layered Cake Approach: Balancing data quality, business value, and effort as you move up or down the stack of model customization.

Prompt engineering is a key part of adapting Large Language Models (LLMs) for specific tasks. Essentially, it involves crafting inputs—written in plain language—that guide the model to produce desired outputs.

The anatomy of a well-crafted prompt involves several components that help shape the response and guide the model effectively.

Context / Background

Set up a clear background or scenario. This helps the model understand the role it should take or the specific expertise required.Example:

“You are a friendly and helpful teaching assistant.”Instructions

Provide specific instructions on how the model should perform the task. This can include stylistic guidelines (e.g., “Use simple language suitable for an 8-year-old.”) or formatting preferences.Example:

“Explain the concept using simple terms and provide an example at the end.”Task

Clearly define what task the model is expected to perform. Whether it’s solving a problem, completing a sentence, or answering a question, be explicit about the desired output.Example:

“Explain what fractions are.”Setup

You can further enhance the prompt by providing any setup that helps clarify the task or intent. This can include role-playing scenarios, providing the model with an expert identity, or giving examples.Example:

“You are an expert nutritionist, and I want you to provide answers in bullet points.”Output Format

Specify how the response should be structured. This might include producing answers in bullet points, a table, or even a piece of code.Example:

“Provide the answer in bullet points.”

When designing prompts, the goal is to clearly communicate what is expected from the model. Some common tasks you may design prompts for include:

Create Text: Generate new content based on provided inputs.

- Example: “Write a paragraph about the benefits of regular exercise.”

Summarize: Condense large pieces of text into shorter summaries.

- Example: “Summarize this article in three sentences.”

Respond to Input: Offer responses based on user questions or instructions.

- Example: “Answer the following question: ‘What are the causes of climate change?'”

Complete the Text: Provide the missing parts of a sentence or paragraph.

- Example: “The teacher teaches the student, but…”

Solve: Generate solutions to problems, whether they’re mathematical, logical, or creative.

- Example: “Solve for X in the equation: 3X + 5 = 20.”

Answer: Provide direct answers to specific questions.

- Example: “What is the capital of France?”

Once you’ve crafted a prompt, it’s important to refine it by evaluating the output. If the response is not what you expected, you may need to tweak the instructions, rephrase the task, or adjust the context.

- Validate: Check if the output meets the expectations. If not, identify where it diverges from the intended goal.

- Change: Modify the language, add more examples, or provide clearer instructions.

- Ask Follow-Up Questions: Prompt the model to respond with clarifications or additional information. This helps to get more accurate or detailed responses.

| Component | Example 1 | Example 2 |

|---|---|---|

| Context | You are an expert nutritionist. | You are a friendly and helpful teaching assistant. |

| Instruction | I want you to provide answers in bullet points. | You explain concepts in great depth using simple terms. |

| Task | Create a meal plan for 2000 calories a day. | Explain what are fractions. |

| Output | – Item 1 – Item 2 – Item 3 | Sure! Fractions are a way of representing a part of a whole… |

In-Context Learning is a powerful feature of large language models where the model learns from the examples provided within the same prompt, without needing explicit fine-tuning. Essentially, the model can infer patterns from the given examples and apply them to new tasks within the same context.

Zero-shot inference is a technique where the model is given a task it hasn’t been explicitly trained on and is expected to complete it correctly. It requires no prior training on task-specific data and instead uses the inherent knowledge the model has from its pre-training.

In this scenario, the prompt asks the model to classify a review’s sentiment without having seen any prior examples:

Here, the model correctly classifies the sentiment as “Positive” without needing explicit instruction or labeled training data for this specific task.

Prompt engineering is essential to getting the best results from LLMs. By breaking down prompts into clear components—context, instructions, task, setup, and output format—you can optimize model performance for various tasks like text generation, summarization, and question-answering. After crafting the prompt, continually refine it to improve its effectiveness.

In-Context Learning (ICL) allows the model to learn from the examples provided in the prompt without explicit training. Zero-shot inference, in particular, demonstrates how models can tackle new tasks they haven’t been explicitly trained on by using their pre-existing knowledge.

Refer the notebook for reference

Description:

TO-SF focuses on tasks requiring understanding and generating semantically meaningful text based on specific questions or prompts. The tasks in this category include commonsense reasoning, question answering (QA), title generation, and more.

Real-World Examples:

- Virtual Assistants (e.g., Google Assistant, Amazon Alexa): These systems use commonsense reasoning to answer user queries, generate text based on voice commands, or extract relevant information to respond to follow-up questions. For instance, answering “What’s the weather like in New York?”

- Customer Support Chatbots: These chatbots use extractive QA and context generation to answer questions about products or customer complaints by retrieving information from manuals or FAQs.

Task Breakdown:

- Commonsense Reasoning: Applied in predicting everyday knowledge, such as “If you leave ice in the sun, it will melt.”

- Question Generation: Used in educational tools to automatically generate practice questions from textbooks.

Description:

Muffin task sets include tasks like language inference, program synthesis, and code repair, useful in technical domains like coding, troubleshooting, and conversational AI systems.

Real-World Examples:

- Programming Assistance (e.g., GitHub Copilot): Muffin tasks help generate code snippets, fix errors, and offer suggestions during development.

- Technical Support: Models can generate troubleshooting steps or offer code repair suggestions in real-time for customers reporting software issues.

Task Breakdown:

- Code Repair: Used in debugging tools to identify and fix faulty code.

- Conversational QA: Applied in customer support to provide real-time solutions for technical queries.

Description:

CoT focuses on reasoning-based tasks like arithmetic reasoning and commonsense reasoning, breaking down complex problems into logical steps.

Real-World Examples:

- Mathematics Tutoring: CoT models break down math problems step-by-step in educational tools, guiding students through solutions.

- Legal and Financial Advisors: CoT reasoning helps explain complex legal and financial concepts in digestible steps for clients.

Task Breakdown:

- Arithmetic Reasoning: Used in financial applications for calculating things like loan interest or savings growth.

- Implicit Reasoning: In legal tech, implicit reasoning helps summarize key points from complex documents.

Description:

Natural Instructions v2 includes a variety of tasks such as text classification, question generation, cause-effect classification, and named entity recognition (NER). These tasks are pivotal in natural language understanding applications.

Real-World Examples:

- Text Moderation: Social media platforms use this for detecting toxic language and harmful content.

- Medical and Legal Document Processing: NER is used to extract names, dates, and specific terms from documents quickly, aiding hospitals and law firms.

Task Breakdown:

- Named Entity Recognition (NER): Applied in legal tech for identifying names, dates, and locations in documents.

- Cause-Effect Classification: Used to analyze relationships in reports, such as scientific literature or disaster assessments.

Held-out tasks evaluate model performance by testing them on tasks they haven’t seen during training, ensuring generalization to new, unseen data.

Description:

MMLU consists of subject-based tasks in domains like medicine, philosophy, and law. These tasks assess a model’s specialized knowledge.

Real-World Examples:

- Legal Research: MMLU-trained models can analyze complex legal documents and provide relevant case law or summaries.

- Medical Decision Support: Models trained on MMLU can assist doctors by analyzing patient data and recommending treatments.

Task Breakdown:

- College Medicine Tasks: Used in educational platforms for medical students, where the model generates explanations for anatomy or physiology questions.

Description:

BBH includes complex problem-solving tasks, often involving logical reasoning and abstract challenges designed to test the limits of a model’s reasoning abilities.

Real-World Examples:

- Complex Problem Solving: Used in financial markets to evaluate stock trends or analyze portfolios using logical analysis.

- Research Tools: In scientific research, BBH models can solve complex data analysis problems, predicting experimental outcomes.

Task Breakdown:

- Dyck Language Tasks: Useful in programming for understanding nested functions or logical expressions in algorithm design.

Description:

TyDiQA focuses on information-seeking question answering across multiple languages, testing cross-linguistic understanding.

Real-World Examples:

- Multilingual Customer Support: TyDiQA-trained models handle queries in multiple languages, helping global companies respond to customers in their native language.

- Cultural and Language Understanding: Governments and NGOs use these systems to gather data in multiple languages, aiding communication in diverse populations.

Task Breakdown:

- Multilingual QA: Used in international customer service, providing accurate answers across languages.

Description:

MGSM focuses on solving basic grade-school-level math problems, such as arithmetic, across different languages.

Real-World Examples:

- Online Tutoring: Educational platforms like Khan Academy use MGSM to help children solve math problems with step-by-step guidance.

- Interactive Learning Toys: Smart learning devices use MGSM to answer simple math questions for kids, providing interactive learning experiences.

Task Breakdown:

- Grade-School Math Problems: Applied in children’s educational apps to explain simple math in a step-by-step manner.

Consider a medical virtual assistant:

- TO-SF handles answering common medical questions like “What are the symptoms of diabetes?”

- Muffin provides technical troubleshooting (e.g., fixing bugs in the assistant itself).

- CoT Reasoning breaks down complex diagnoses for patients.

- Natural Instructions v2 classifies patient queries and detects toxic language.

- Held-out Tasks (MMLU) verify the assistant’s accuracy in medical knowledge, ensuring accurate domain-specific responses.

In this workflow, the virtual assistant combines fine-tuned models for regular tasks with held-out evaluations to ensure accuracy and robustness in new situations.

In-Context Learning (ICL) refers to the method where large language models (LLMs) make predictions by providing multiple examples in the input prompt to guide the model’s output. Although powerful, ICL has some limitations:

May Not Work for Smaller Models

Smaller language models may struggle to generalize from examples presented within the context window, especially if the task is complex or requires deep reasoning.Example:

A smaller model might fail to accurately classify the sentiment of reviews (e.g., “I love this product!”), because it lacks the capacity to learn the pattern from just the provided examples.Examples Take Up Space in the Context Window

In-context learning relies heavily on including examples within the model’s context window. However, the size of this window is limited, and including more examples means less space for actual task-specific content.Example:

When classifying multiple reviews in a single prompt (e.g., “I love this movie” → Positive, “This chair is uncomfortable” → Negative), each example consumes space, leaving less room for processing new, unseen reviews. This makes it harder to scale ICL to larger tasks.

- Even with multiple examples, there’s a limit to how much the model can learn or retain within the context window.

- These limitations mean that ICL may not be scalable or efficient for larger datasets or more intricate tasks.

Fine-tuning is the process of customizing a pre-trained large language model (LLM) by training it on a specific dataset. This enables the model to learn from new data and improve performance on specific tasks.

- Fine-tuning eliminates the need to include multiple examples in the prompt because the model internalizes the task-specific patterns during training.

- The entire context window can now be dedicated to the task at hand, making the model more efficient and capable of handling larger, more complex inputs.

Suppose we need to classify product reviews as positive or negative. Instead of relying on the model to infer the task from multiple examples (as in ICL), we fine-tune the model using thousands of labeled examples from the product review domain.

Before Fine-Tuning:

- Input: “Classify this review: I loved this product!”

- Output (ICL): “Sentiment: Positive” (But this may require multiple examples in the prompt).

After Fine-Tuning:

- Input: “I loved this product!”

- Output: “Positive” (The model now understands the task directly without needing examples in the prompt).

- Increased Efficiency: Fine-tuned models require less input prompt engineering since they already know the task.

- Better for Specialized Tasks: Models can be fine-tuned for niche domains like medical diagnosis or legal text analysis, improving performance on those specific tasks.

- Scalability: Fine-tuning allows models to handle large-scale tasks where in-context learning might struggle due to space constraints.

By combining in-context learning for quick tasks and fine-tuning for specialized tasks, we can optimize the performance of LLMs across a wide range of real-world applications.

Fine-tuning Large Language Models (LLMs) is a process of adapting a pre-trained model to specific tasks or domains by providing additional task-specific data. This process helps to customize the model and improve its performance in specialized tasks or domains.

Pre-trained LLM

- LLMs are typically trained on massive amounts of unstructured textual data, which includes various types of content (books, websites, articles, etc.).

- This stage of training results in a general-purpose language model that understands language patterns but is not tailored for any specific task.

Example:

- The initial pre-training phase may involve training on gigabytes to petabytes (GB-TB-PB) of text without any specific labeling, which helps the model to understand general language structures.

Task-Specific Data for Fine-Tuning

- For fine-tuning, the pre-trained LLM is exposed to specific textual data that is more structured and labeled for the task at hand.

- The data used here is generally much smaller compared to the massive corpus used for pre-training, but it is highly relevant to the task (e.g., labeled text for sentiment analysis, question-answer pairs, etc.).

Example:

- Suppose we want the model to perform sentiment analysis. The fine-tuning data would include sentences like:

- Text: “I love this product!”, Label: “Positive”

- Text: “This is the worst service!”, Label: “Negative”

Fine-Tuned LLM

- The result of the fine-tuning process is a model that retains the general language understanding from pre-training but is now also highly specialized in the given task.

- This fine-tuned LLM can now more accurately perform the specific task it was trained for (e.g., sentiment analysis, customer support, medical text classification).

Pre-training Phase

During this phase, the LLM is trained on an enormous quantity of unstructured data. This data comes from various sources such as:- Articles

- Websites

- Books

- Conversations This step helps the LLM learn the general structure and patterns of language, but the model remains domain-agnostic. It can understand language, but it isn’t particularly good at any one specific task.

Fine-tuning Phase

Once the LLM has completed pre-training, the next step is fine-tuning. This involves training the model further on a specific dataset that is more targeted and structured for a particular task.- The input data now includes labeled pairs where each text entry is associated with the correct output or label.

- This allows the model to adapt its language understanding to specific patterns, improving performance on the desired task.

- Fine-tuning also requires fewer computational resources compared to pre-training, as the datasets are smaller but more focused.

Final Output

The fine-tuned model is now optimized for performing a particular task, like text classification, sentiment analysis, code generation, etc. This final model benefits from both the broad language knowledge it acquired during pre-training and the specific expertise it gained during fine-tuning.

Customer Support Bot:

- A company might start with a pre-trained LLM that understands general language.

- The company fine-tunes the LLM using its own customer support conversations labeled by category (e.g., “Technical Issue,” “Billing Issue,” etc.).

- The fine-tuned model can now more effectively handle customer inquiries by accurately identifying and addressing specific issues.

Medical Text Analysis:

- An LLM is pre-trained on a broad dataset of general texts.

- It is fine-tuned using medical journals and research papers labeled with medical conditions and outcomes.

- The fine-tuned model can now perform tasks like identifying symptoms from patient notes or classifying medical documents.

- Pre-trained LLMs provide a broad understanding of language by being trained on vast, unstructured datasets.

- Fine-tuning enables these models to specialize in specific tasks by exposing them to labeled and task-specific data.

- This combination of general language understanding and task-specific knowledge allows fine-tuned models to outperform general models on specialized tasks.

In the evolving landscape of machine learning, fine-tuning large language models (LLMs) has gained prominence as a critical way to adapt pre-trained models to specific tasks or domains. Fine-tuning methods like PEFT, LoRA, Soft Prompts, and RLHF have emerged to optimize performance, often with fewer computational resources. In this document, we explore these methods in detail and explain how they can be applied in real-world scenarios.

Parameter-Efficient Fine-Tuning (PEFT) is a fine-tuning strategy designed to optimize the adaptation of large models by modifying only a small fraction of their parameters. This is beneficial when there are limited computational resources or small datasets.

- Reduced Computational Load: Only a small subset of parameters is updated.

- Memory Efficiency: Consumes less memory, making it feasible to fine-tune on smaller hardware.

- Faster Fine-Tuning: Reduces the time needed to fine-tune.

- Prevents Overfitting: Helpful when there is limited labeled data.

- Adapter Layers: Additional layers introduced between existing layers of the model.

- LoRA (Low-Rank Adaptation): Explained below.

- Prefix Tuning: Adds specific tokens to the input to focus the model on a task.

- Prompt Tuning: Fine-tunes special “soft prompts” for the task.

- Domain Adaptation: Fine-tune general LLMs to specific industries like healthcare, legal, and finance.

- Multi-Task Learning: Efficiently adapt one model to perform multiple tasks, such as text generation, question answering, and sentiment analysis.

LoRA is a technique designed to reduce the number of trainable parameters during fine-tuning by approximating the model’s weight matrices using smaller, low-rank matrices.

- Instead of updating all model weights, LoRA decomposes large matrices into smaller, low-rank matrices.

- Only these smaller matrices are fine-tuned, significantly reducing computational costs.

Consider a company that has a general-purpose language model, but wants to fine-tune it for specific industries such as finance or legal. Using LoRA, they can adjust just a fraction of the parameters to specialize the model for financial language without retraining the entire model.

Key Benefits:

- Lower Computational Cost: By fine-tuning only low-rank matrices, fewer parameters need to be updated.

- Versatility: Adapt a single model for multiple use cases across different industries.

Soft Prompts refer to the technique where instead of tuning model parameters, small additional input tokens (the “prompts”) are fine-tuned to guide the model.

- Soft Prompts are not hardcoded text prompts but learned, task-specific inputs.

- These inputs guide the model on how to respond to particular queries or tasks without altering its internal layers.

Imagine a chatbot being fine-tuned for different companies—each company might have its own style of customer communication. Soft Prompts can be fine-tuned to nudge the chatbot towards more formal or casual language, depending on the company, without having to retrain the entire model.

Key Benefits:

- Customization without Re-training: Modify the behavior of models without changing core parameters.

- Less Resource-Intensive: Ideal for task-specific adaptation.

Reinforcement Learning from Human Feedback (RLHF) leverages human input to guide the fine-tuning of models. Human evaluators provide feedback on the outputs generated by the model, which is then used to train the model through reinforcement learning techniques.

- Step 1: The model generates outputs.

- Step 2: Human evaluators rank or score these outputs based on criteria like usefulness, accuracy, or fluency.

- Step 3: These rankings serve as rewards in the reinforcement learning algorithm, guiding the model to improve over time.

A company that develops AI customer service agents might use RLHF to ensure that the chatbot provides answers that are both accurate and in line with company policies. Human evaluators could provide feedback on responses, and the model would adapt based on this feedback.

Key Benefits:

- Human-Centered Model Training: Models are fine-tuned to human preferences, leading to more natural, helpful, and accurate outputs.

- Better Alignment: Models become more aligned with real-world human expectations and ethical considerations.

Human Ranking is a method where human annotators rank the outputs of a model based on their quality. It is used to fine-tune models by providing structured, ranked data for supervised learning or reinforcement learning algorithms.

- Step 1: The model generates several outputs for a given task.

- Step 2: Human evaluators rank these outputs from best to worst.

- Step 3: The model learns from these rankings to improve future outputs.

Human ranking is frequently used in search engines. For example, evaluators may rank how well search results match a user’s query, helping fine-tune the algorithm to show better results in the future.

Key Benefits:

- Refining Outputs: Human insight helps to ensure model outputs are aligned with real-world expectations.

- Improved User Experience: Models become more user-friendly by integrating human preferences directly.

Pairwise ranking fine-tuning is an extension of human ranking where pairs of outputs are compared, and the better one is selected. The model is then trained to consistently prefer the higher-quality outputs.

- Step 1: Two model outputs are generated for the same input.

- Step 2: Human annotators select the superior output.

- Step 3: The model learns from these pairwise comparisons to improve future performance.

In recommender systems like Netflix or Amazon, pairwise ranking fine-tuning can be used to rank movie or product suggestions. If one recommendation is preferred over another, the model learns to rank similar content higher in the future.

Knowledge Grounding refers to providing models with access to external sources of verified information during the fine-tuning or inference process. This helps models generate factually accurate and contextually relevant responses.

- Step 1: The model is given access to a knowledge base, like a database of verified facts or a set of articles.

- Step 2: The model uses this external knowledge during fine-tuning to improve the accuracy of its outputs.

In medical applications, LLMs can be fine-tuned using a knowledge base of verified medical literature. When the model generates responses, it pulls from this knowledge base to ensure the accuracy and reliability of its outputs.

Key Benefits:

- Improved Accuracy: Models are less likely to “hallucinate” incorrect information.

- Enhanced Reliability: Outputs are grounded in real-world facts, making them more useful in professional domains.

Fine-tuning large language models has evolved into an efficient process with the advent of techniques like PEFT, LoRA, Soft Prompts, RLHF, and Human Ranking. These methods allow for greater customization, better performance, and lower resource consumption. Each method has its unique advantages, allowing businesses and developers to tailor LLMs for specific tasks or domains efficiently.

Key Techniques in Fine-Tuning:

- PEFT: Reduces the need to fine-tune all model parameters, making it more efficient.

- LoRA: Fine-tunes low-rank matrices to optimize memory and computational cost.

- Soft Prompts: Guides model behavior without changing internal weights.

- RLHF: Uses human feedback to train models through reinforcement learning.

- Pairwise Ranking: Compares outputs in pairs to improve model ranking capabilities.

- Knowledge Grounding: Incorporates external knowledge to improve factual accuracy.

These methods have real-world applications in domains like customer service, healthcare, search engines, and recommender systems. By leveraging these techniques, businesses can deploy highly specialized and efficient AI models to meet diverse challenges.